4DSOUND: A New Approach to Spatial Sound Reproduction & Synthesis (2012-2016)

Paul Oomen, Poul Holleman, Leo de Klerk

category:

Paper

date:

November 2016

author:

Paul Oomen, Poul Holleman, Leo de Klerk

published by:

Living Architecture Systems Group, Spatial Sound Institute

This white paper sets forth the fundamental principles of the 4DSOUND system and its surrounding technological infrastructure. To further the exploration of spatial sound as a medium, the authors argue that the commonly applied methods for producing spatial sound are inherently limited by the sound source, i.e. loudspeaker boxes or headphones, and that reproducing or synthesising sound spatially calls for a new approach to the medium. In conclusion, it is stated that it is possible to create an innovative and non-conventional sound system that removes the localisation of the sound source from the equation, regardless of the listener’s individual hearing properties.

The resulting 4DSOUND system provides for a social listening area and improved loudness at equal acoustic power. The system is backwards compatible with existing audio reproduction formats, allows integration with a wide variety of control interfaces and encourages new approaches in design.

Earlier iterations of this paper were presented by the authors at the AES in Amsterdam (December 2012), the INSONIC Conference at ZKM Karlsruhe (November 2015) and the Living Architecture Systems Symposium (November 2016).

It was originally published as part of Living Architecture Systems Group: White Papers.

Introduction

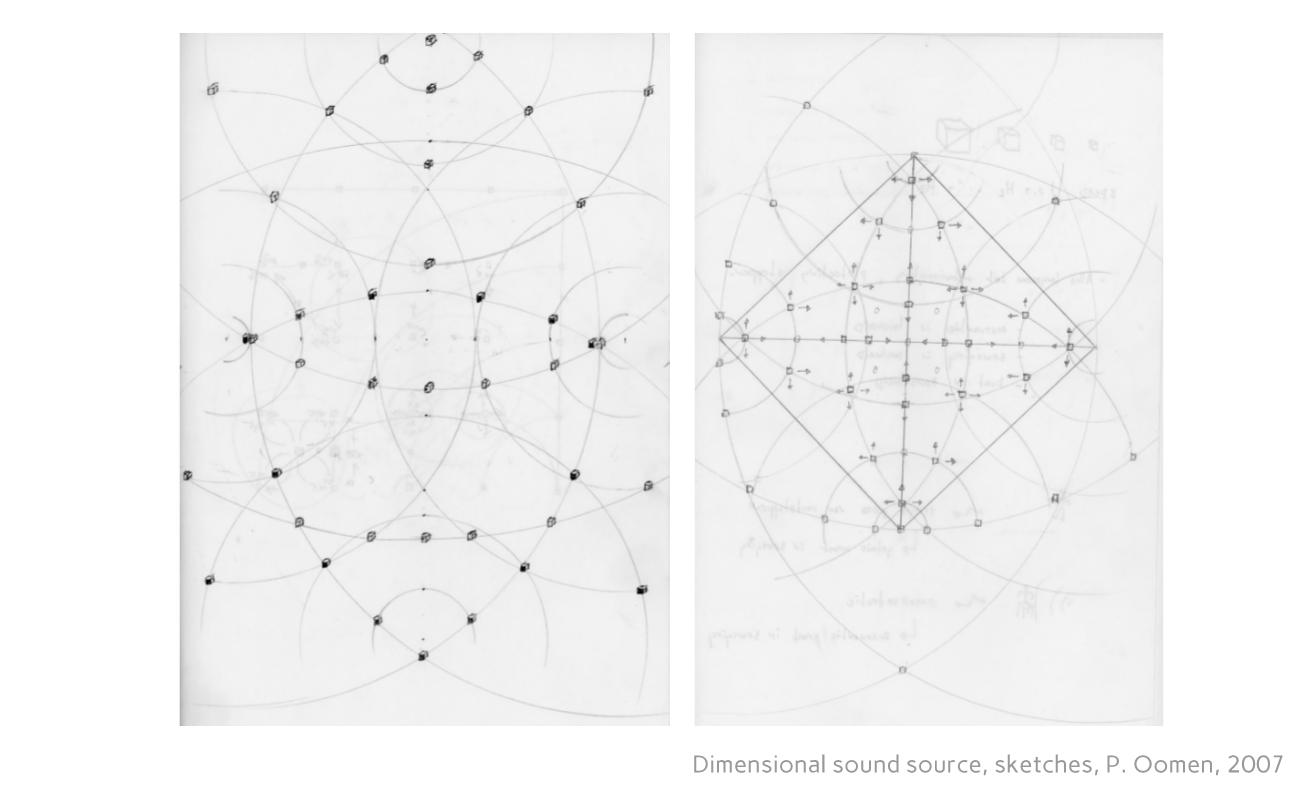

In early 2007, Paul Oomen conceptualised the design of a sound system able to produce dimensional sound sources in an unlimited spatial continuum. Since then, Oomen has worked with Poul Holleman, Luc van Weelden and Salvador Breed on the development of object-based processing software and control interfaces for the system.

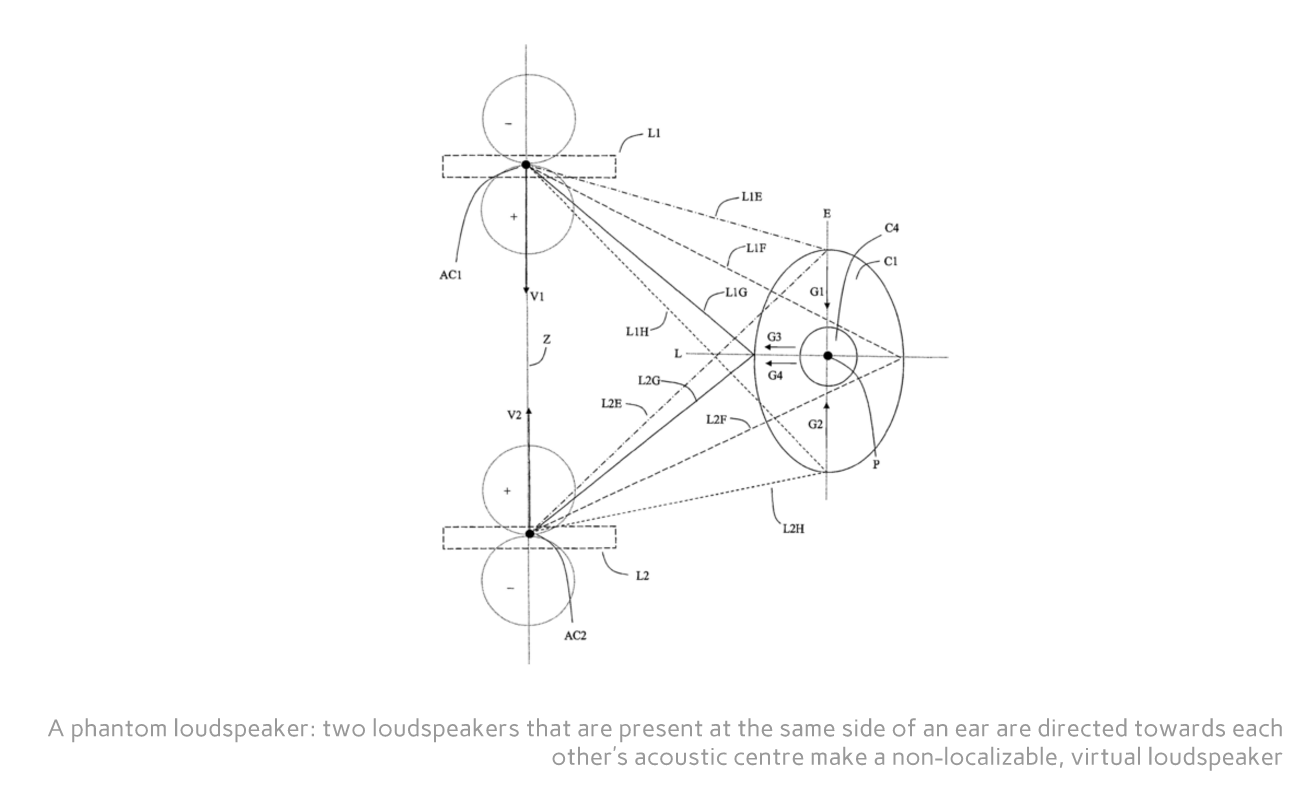

Independently of Oomen, but in the same year, Leo de Klerk made public his patent application for an omnidirectional loudspeaker that, when arranged in a configuration, produces coherent phantom images in both the vertical and horizontal plane (int. Patent no WO2006100250 A transducer arrangement improving naturalness of sound, 2007). Since 2010, Oomen, Holleman and de Klerk have worked together on the development of the system.

The 4DSOUND system was first presented to the public, in the presence of the authors et al., during Amsterdam Dance Event at the Muziekgebouw aan het IJ in October 2012, and has since been showcased in the Netherlands, Germany, France and Hungary in association with a diverse range of artists from the fields of electronic music, sound art and immersive technologies. The system has been the subject of multiple lecture presentations, written publications and film documentaries.

This article first outlines the fundamental principles of the system by looking at a fallacy of spatial sound reproduction that can be derived from the theories of the scientists Von Bekésy and Moore, and presents the authors’ new approach to this problem. We then continue with an overview of the technical implementation of the system, which incorporates the design of new hardware, software and interactive interfaces. Finally, we present our conclusions regarding the current status of the system’s artistic and technical achievements, emphasising the development of the system as a discourse and platform in its own right.

Fundamental principles

A. Fallacy of spatial sound reproduction

We are familiar with Von Bekésy’s problem (Experiments in Hearing, 1960): the ‘in the box’ sound effect seems to increase as the loudspeaker’s dimensions decrease. In experimental research on the relation between acoustic power, spectral balance, and perceived spatial dimensions and loudness, Von Bekésy’s test subjects were unable to correctly indicate the relative dimensional shape of a reproduced sound source as soon as the source’s dimensions exceeded the actual shape of the reproducing loudspeaker box. One may conclude that the loudspeaker’s spatio-spectral properties introduce a message-media conflict when transmitting sound information: we cannot recognize the size of the sound source in the reproduced sound, and instead we listen to the properties of the loudspeaker.

Why does the ear lock so easily onto the loudspeaker characteristics? Based on the hypotheses of Brian Moore (Interference effects and phase sensitivity in hearing, 2002) et al., we may conclude that this is because the ear by nature produces two-dimensional nerve signals to the brain that reflect the three-dimensional wave interference resulting from the direct interaction between the ear’s and the physical sound source’s spatio-spectral properties. Therefore any three-dimensional spatio-spectral message information embedded in the signal to be transmitted is masked by the physically present media information related to the loudspeaker’s properties. One could say that in prior art solutions the input signal of the loudspeaker system merely functions as a carrier signal that modulates the loudspeaker’s characteristics.

A phantom sound source reproduced by a stereo system appears more realistic to us than the same sound reproduced from a single box. However, spatial sound reproduction by means of stereophonic virtual sources is only a partial solution, because it fails for any stereophonic information that does not meet the L=R requirement for perfect phantom imaging. In fact, stereophony is no more than improved mono, with the listening area restricted to a sweet spot.

B. A phantom loudspeaker

For a new approach to this problem, we considered that it is better to overrule the monaural spatio-spectral coding than to try to manipulate it (as, for instance, in crosstalk suppression), thus preventing interaural cross-correlation, which is the root mechanism behind the listener’s ability to localize any non-virtual sound source, i.e. a loudspeaker.
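To make the term ‘interaural cross-correlation’ concrete: a common way to quantify it is the interaural cross-correlation coefficient (IACC), the peak of the normalised cross-correlation between the left- and right-ear signals over lags of roughly ±1 ms. The sketch below is a generic illustration of that measure in plain Python, not part of the 4DSOUND software; the function name and the 1 ms lag window are assumptions.

```python
import math

def iacc(left, right, sample_rate, max_lag_ms=1.0):
    """Interaural cross-correlation coefficient (illustrative sketch).

    Returns the peak absolute value of the normalised cross-correlation
    between two ear signals, searched over lags within +/- max_lag_ms.
    A value near 1 means the ears receive strongly correlated signals.
    """
    if len(left) != len(right):
        raise ValueError("ear signals must have equal length")
    energy = math.sqrt(sum(x * x for x in left) * sum(y * y for y in right))
    if energy == 0.0:
        return 0.0
    max_lag = int(sample_rate * max_lag_ms / 1000.0)
    n = len(left)
    best = 0.0
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the overlapping samples.
        acc = 0.0
        for i in range(max(0, -lag), min(n, n - lag)):
            acc += left[i] * right[i + lag]
        best = max(best, abs(acc) / energy)
    return best
```

Identical ear signals give an IACC of 1; in this framing, a source that cannot be localised as a physical box is one whose cues do not produce the consistent interaural correlation pattern of a real point source.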

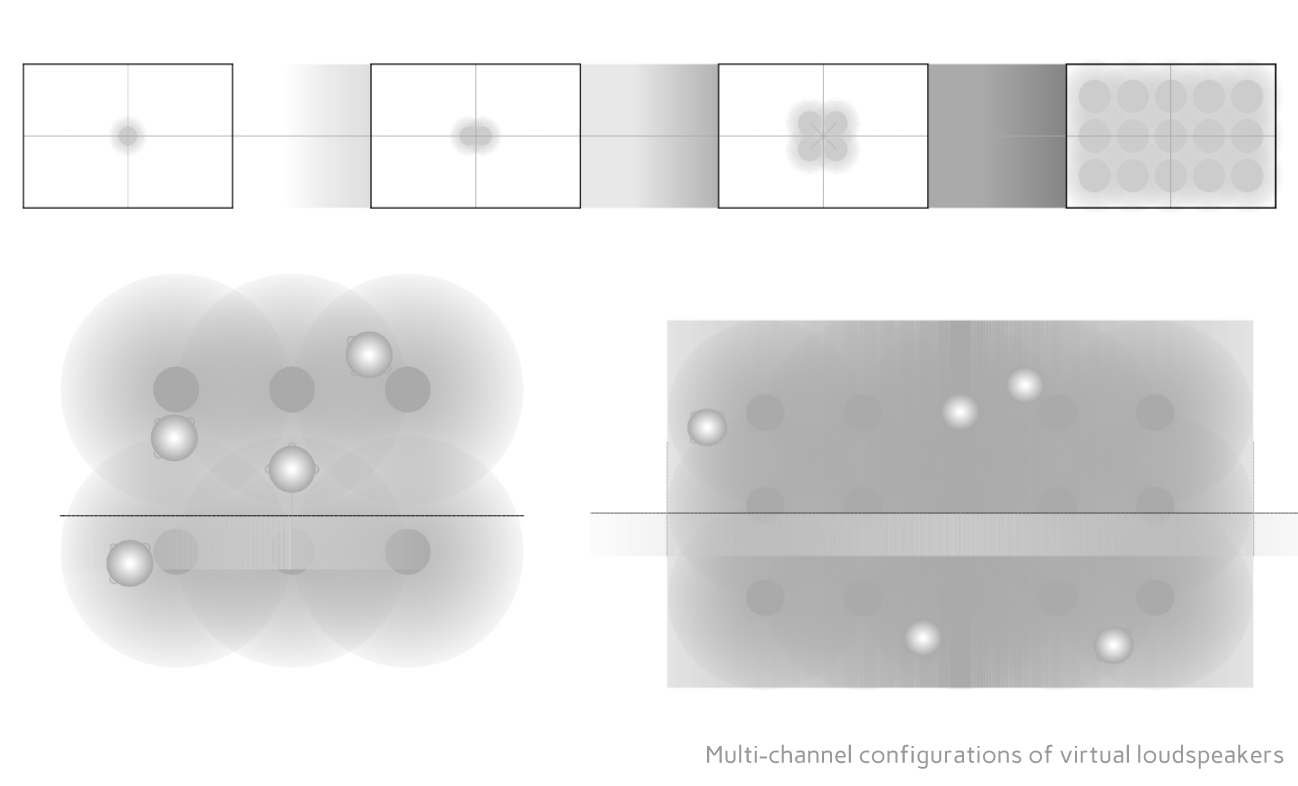

The resulting application describes a sound system that produces coherent vertical phantom images that can be decomposed neither monaurally nor binaurally into their root sources, i.e. the actual loudspeaker drivers. The loudspeaker becomes audibly non-localizable, and any multi-channel horizontal configuration of these virtual speakers is possible without adding masking media properties. As a consequence, the listening area is no longer restricted to a sweet spot. Loudness perception is dramatically improved, because the ‘out of the box’ sound screen now fits even the largest sound source shapes.

Technical Implementation

A. A rotation symmetrical response

To produce a coherent vertical phantom image that must also interact coherently in the horizontal plane, so as to allow multi-channel configurations, a driver structure with a rotation-symmetrical off-axis response is a main requirement. The loudspeakers are constructed from modified co-axial drivers; the modification consists of an altered implementation of existing driver parts and essentially requires no re-engineering of the chassis. In addition, the modification makes it possible to implement further refinements, e.g. mass-less motional feedback sensing, that were difficult to apply in the original design.

B. An unlimited spatial continuum

This application leads to the choice of a right-angled, equally spaced speaker configuration extending in the vertical and horizontal planes, which enables balanced sound pressure throughout the entire listening area. Within this configuration we define an ‘inside speaker field’ and an ‘outside speaker field’, separated by the physical borders of the speaker configuration; together they provide an unlimited spatial continuum that both incorporates the active listening area and expands beyond it.
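An equally spaced, right-angled configuration can be generated from a speaker count per axis and a single spacing. The sketch below uses the coordinate convention of the next paragraph (X left/right, Y above/below, Z front/back, origin at the centre of the floor); `speaker_grid` and its parameters are hypothetical, and speaker orientation is ignored.

```python
from itertools import product

def speaker_grid(nx, ny, nz, spacing, base_height=0.0):
    """Return (x, y, z) positions of an nx * ny * nz grid of equally spaced speakers.

    X and Z are centred on the origin; Y (height) starts at base_height.
    Hypothetical helper for illustration only.
    """
    if min(nx, ny, nz) < 1 or spacing <= 0:
        raise ValueError("need at least one speaker per axis and a positive spacing")

    def centred(n):
        # Offsets such as -1.5, -0.5, 0.5, 1.5 (times spacing) for n = 4.
        return [(i - (n - 1) / 2.0) * spacing for i in range(n)]

    heights = [base_height + j * spacing for j in range(ny)]
    return [(x, y, z) for x, y, z in product(centred(nx), heights, centred(nz))]
```

For example, `speaker_grid(4, 2, 4, 2.0)` yields 32 positions spanning -3 m to 3 m on X and Z, in two layers at 0 m and 2 m.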

The spatial continuum is defined in Cartesian coordinates, X (left/right), Y (above/below) and Z (front/back), in which coordinate [0,0,0] is automatically placed at the centre of the listening area at floor level. This infinite and omnidirectional sound screen can now be used to position dimensional sound sources of various geometrical shapes, such as points, lines, planes and blocks, both inside the speaker field, in between and next to the listeners, and outside the speaker field, above, beneath and around the listeners at unlimited distances.
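Deciding whether a source lies in the inside or the outside speaker field can be sketched as a bounding-box test in the coordinate system just described. This assumes that the field border is simply the axis-aligned box spanned by the outermost speakers; `field_bounds` and `in_speaker_field` are illustrative names, not part of the 4DSOUND software.

```python
def field_bounds(speakers):
    """Axis-aligned bounding box (min corner, max corner) of the speaker positions."""
    xs, ys, zs = zip(*speakers)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def in_speaker_field(position, speakers):
    """True if an [x, y, z] position lies inside (or on) the speaker field's bounding box.

    Positions outside the box, e.g. below floor level (y < 0) or beyond the
    outer speakers, belong to the outside speaker field in this sketch.
    """
    lo, hi = field_bounds(speakers)
    return all(l <= p <= h for p, l, h in zip(position, lo, hi))
```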

C. Object-based processing software

The object-based processing software is written in C++. It accepts discrete sound inputs and processes them according to the spatial data it receives. More than two hundred of its data parameters are dynamic and can be addressed via OSC (Open Sound Control).
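Since the dynamic parameters are addressed via OSC, any client that can emit OSC messages can drive the software. The sketch below encodes an OSC 1.0 message with float32 arguments using only the Python standard library and sends it over UDP. The address `/source/1/position`, the host and the port are hypothetical placeholders, not the documented 4DSOUND address space.

```python
import socket
import struct

def _osc_string(text):
    """Encode an OSC string: ASCII bytes, a terminating NUL, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, *floats):
    """Build an OSC 1.0 message whose arguments are all big-endian float32 ('f')."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload

def send_osc(message, host="127.0.0.1", port=9000):
    """Send one OSC packet over UDP (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

For instance, `send_osc(osc_message("/source/1/position", 2.0, 1.5, -4.0))` would send a hypothetical XYZ position for one source; the real address names and value ranges must be taken from the system’s own documentation.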

The software defines sound sources, reflecting walls and global transformations of the spatial field such as translation, rotation and ploding. The spatial synthesis can be divided into two parts: i) mono processing of the input signal according to virtual spatial properties, the result of which is subsequently converted by ii) multi-channel processing, which treats each speaker output independently.
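Global transformations can be sketched as a single operation applied to every source position. Here ‘ploding’ is assumed to mean scaling positions away from (explode, factor > 1) or towards (implode, factor < 1) a centre point; rotation is taken about the vertical Y axis, and the order of operations is an arbitrary choice of this sketch rather than the software’s documented behaviour.

```python
import math

def transform_field(positions, translate=(0.0, 0.0, 0.0), rotate_deg=0.0,
                    plode=1.0, centre=(0.0, 0.0, 0.0)):
    """Apply a global transformation to a list of (x, y, z) source positions.

    Order (an assumption of this sketch): plode around `centre`, rotate around
    the vertical Y axis through `centre`, then translate.
    """
    cx, cy, cz = centre
    a = math.radians(rotate_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    result = []
    for x, y, z in positions:
        # Ploding: scale the offset from the centre.
        dx, dy, dz = (x - cx) * plode, (y - cy) * plode, (z - cz) * plode
        # Rotation in the horizontal X/Z plane (about the Y axis).
        rx = dx * cos_a + dz * sin_a
        rz = -dx * sin_a + dz * cos_a
        result.append((cx + rx + translate[0],
                       cy + dy + translate[1],
                       cz + rz + translate[2]))
    return result
```

Because every source goes through the same transformation, the relative arrangement of multiple sources is preserved while the whole field is moved, turned or scaled.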

For initialization, the software needs the speaker configuration properties, such as the dimensions and spacing of the speakers, and the number of active sources, where a source is a serial chain of mono modules that synthesize spatial phenomena such as distance, angle and Doppler shift. The multi-channel conversion happens at the end of this chain and translates the sound source’s spatial properties, such as position and dimensions, into speaker amplitudes and delays. Its algorithms are based on matrix transformations and vector math. Besides amplitudes and phase delay times, it also processes interactive data, such as whether or not a source is located behind or in front of a virtual reflecting wall.
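A generic way to turn a dimensional source into per-speaker amplitudes and delays is to measure the shortest distance from each speaker to the source’s shape, derive a delay from the speed of sound and a gain from an inverse-distance law, and normalise the gains for constant power. The sketch below does this for box-shaped sources and adds a plane-side test for virtual walls. It is an illustrative assumption, not the 4d.pan algorithm, and all names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at room temperature

def distance_to_box(point, centre, size):
    """Shortest distance from a point to an axis-aligned box (0 if the point is inside)."""
    total = 0.0
    for p, c, s in zip(point, centre, size):
        excess = abs(p - c) - s / 2.0
        if excess > 0.0:
            total += excess * excess
    return math.sqrt(total)

def speaker_feeds(centre, size, speakers, ref_distance=1.0):
    """Per-speaker (gain, delay_seconds) for a box-shaped source.

    Gains fall off as 1/distance (clamped at ref_distance) and are normalised
    so that the summed power is 1; delays are distance / speed of sound.
    """
    distances = [distance_to_box(s, centre, size) for s in speakers]
    raw = [1.0 / max(d, ref_distance) for d in distances]
    norm = math.sqrt(sum(g * g for g in raw))
    return [(g / norm, d / SPEED_OF_SOUND) for g, d in zip(raw, distances)]

def in_front_of_wall(point, wall_point, wall_normal):
    """True if the point lies on the side of a virtual wall that its normal points to."""
    return sum((p - w) * n for p, w, n in zip(point, wall_point, wall_normal)) > 0.0
```

Enlarging the box brings more speakers to zero distance, so their gains and delays converge; this is one simple way in which a source’s dimensions, and not only its position, can shape the speaker outputs.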

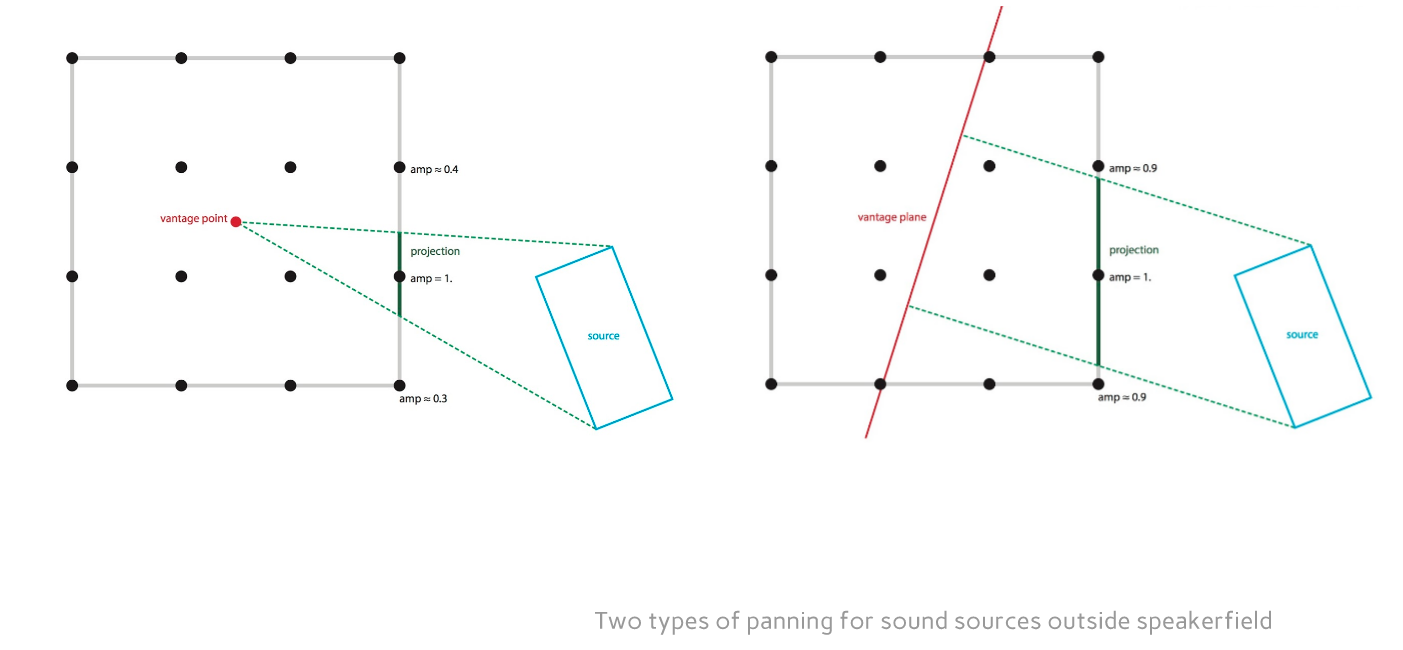

In the multi-channel processing, two panning algorithms are active: one for sources inside the speaker field and one for sources outside it. Inside-field panning is based on the shortest distance between the dimensional sound source and the speakers. Outside-field panning is based on the shortest distance between the sound source’s projection onto the edge of the speaker field and the speakers defining that edge. There are two types of outside-field projection: ‘perspective panning’, which projects from a vantage point, typically the centre but possibly any other coordinate inside the speaker field, and ‘right-angled panning’, which projects from a vantage ‘line’ through any point, typically the centre, resulting in wider projections because they do not diminish with increasing distance.
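The difference between the two outside-field projections can be sketched geometrically, assuming that the field border is the axis-aligned box between the corners `lo` and `hi`, and that right-angled panning amounts to a perpendicular (nearest-point) projection onto that box. Both are interpretations of the description above, not the 4d.pan code.

```python
def perspective_projection(source, vantage, lo, hi):
    """Project a source onto the field border along the ray from a vantage point.

    The vantage point is assumed to lie inside the box lo..hi; a source already
    inside the box is returned unchanged.
    """
    direction = [s - v for s, v in zip(source, vantage)]
    t_exit = 1.0
    for d, v, l, h in zip(direction, vantage, lo, hi):
        # Parameter at which the ray crosses the face it is heading towards.
        if d > 0.0:
            t_exit = min(t_exit, (h - v) / d)
        elif d < 0.0:
            t_exit = min(t_exit, (l - v) / d)
    return tuple(v + t_exit * d for v, d in zip(vantage, direction))

def right_angled_projection(source, lo, hi):
    """Project a source perpendicularly onto the field by clamping each coordinate."""
    return tuple(min(max(s, l), h) for s, l, h in zip(source, lo, hi))
```

Projecting both ends of a 4 m wide line source placed 20 m to the right of a field spanning -4 m to 4 m illustrates the distinction made in the text: the perspective projection shrinks the source to 0.8 m on the edge, whereas the right-angled projection keeps its full 4 m width regardless of distance.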

The combination of loudspeakers with a rotation-symmetrical response, arranged in an equally distributed speaker configuration and driven by object-based processing software, results in the 4DSOUND System as an integrated approach.

Processing Modules and Interfaces

The 4DSOUND System describes processing modules that allow control over the following aspects of sound:

- Positioning of sound sources in an unlimited spatial continuum.
- Sculpting the physical dimensions of sound sources.
- Composing spatial movement trajectories of sound sources.
- Generating spatial movements of sound sources through physical modelling, wave shape modulations, algorithmically driven gestures or responsive behaviour.
- Spatial sound perception, such as the perception of distance, elevation, angle, speed, phase shifts and diffusion of the sound source in space.
- Sculpting spatial acoustics by designing virtual walls, their distance, depth and material characteristics.
- Global transformation of the spatial field, such as transposing, rotating, ploding and folding multiple sound sources at the same time.

A. 4DSOUND Engine

The 4DSOUND Engine forms the core of a 4DSOUND system configuration. The Engine runs a dedicated multi-channel distribution protocol called 4d.pan, which handles the translation of dimensional sound sources, and of the interactions between objects, into multiple loudspeaker outputs.

4DSOUND is an object-based sound system. In practice, controlling the system is not primarily about distributing sound to multiple speakers; instead, users are encouraged to think about space as a continuum of physical dimensions and distances, not limited by the physical constraints of the room they are in and independent of the positions and number of speakers used. While working in 4DSOUND, the Engine runs as a stand-alone processor that the user handles only passively, to monitor audio levels, data flow and performance.

B. 4DSOUND Animator

The 4DSOUND Animator is a software application that animates and visualises the sound sources in space. It contains modules for generative movements, scripts for movement trajectories, swarming algorithms and complex shapes for multiple-point delay patterns. These processes are all generated by visual algorithms, and the resulting spatial sound data is then communicated to the Engine.

The Animator contains a dedicated editing and drawing function for composing paths, i.e. trajectories of sound sources moving in space. Paths can be edited in real time, including changes in the speed and dimensionality of the sound source along the course of a trajectory. Paths can then be exported as scripts and applied within a composition or live performance with 4DSOUND. Editing paths and generating other spatial processes in the Animator can be done both while connected to the 4DSOUND system and offline.
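A path can be thought of as a polyline of waypoints along which a source travels at a given speed. The sketch below, assuming straight segments between waypoints and a distance-based parameterisation (the Animator’s actual path format is not described here), returns the position after a given travelled distance; `path_position` is a hypothetical name.

```python
import math

def path_position(waypoints, travelled):
    """Position after moving `travelled` metres along a polyline of (x, y, z) waypoints.

    Positions before the start clamp to the first waypoint; positions past the
    end clamp to the last waypoint.
    """
    if travelled <= 0.0:
        return tuple(waypoints[0])
    for a, b in zip(waypoints, waypoints[1:]):
        seg = math.dist(a, b)
        if travelled <= seg and seg > 0.0:
            # Linear interpolation within the current segment.
            f = travelled / seg
            return tuple(p + f * (q - p) for p, q in zip(a, b))
        travelled -= seg
    return tuple(waypoints[-1])
```

Speed changes along a trajectory then become a question of how fast `travelled` grows over time: a source moving at 0.5 m/s for 4 s has travelled 2 m, so `path_position(path, 0.5 * 4.0)` gives its position at that moment.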

On the one hand, the Animator is used as a physics engine for modelling complex spatial processes; on the other, it functions as a visual monitor of sound activity in space. It shows the dimensionality of sound sources relative to the size of the speaker configuration in use, as well as the real-time transformations applied to the sound sources, such as the shape of the spatial movements, changes in the dimensionality of the sound sources and the architecture of the virtual walls that reflect and dampen the sound.

C. Open-End Spatialisation Format

4DSOUND has been constructed as an open-end spatialisation format that can also be controlled by custom-built and third-party software.

Thanks to the accessibility of the format, a diversity of approaches to interfacing with a 4DSOUND System has emerged. They range from gestural control of sound in space using LeapMotion and Arduino sensors, through devices that measure bio-physical and brain-wave data to establish a direct connection between the inner body and the outer space, and real-time tracking systems for people moving in the space, to custom-built instruments in software such as Max/MSP, SuperCollider, PureData, Processing and Kyma that control 4DSOUND’s spatial properties.

D. Connectivity

All parameters of 4DSOUND are communicated via OSC (Open Sound Control). The control of the system therefore allows easy integration with other software and hardware controllers, such as music production software, mobile media apps and real-time tracking systems.

The OSC data structure of 4DSOUND can also be used as a versatile way to communicate back and forth between different platforms. 4DSOUND’s OSC platform provides a hub where incoming and outgoing data from multiple devices and software applications are shared on the same network, and it allows data flows to be scaled to parameter ranges and smoothed. In this way, direct communication can be established between a 4DSOUND System and game engines, virtual reality devices and visual systems.
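Scaling and smoothing incoming data before it reaches a parameter is a standard pattern. Below is a minimal sketch with a linear range mapping and a one-pole smoothing filter; the names and the example ranges (a 0 to 127 MIDI-style controller mapped to ±10 m) are illustrative assumptions, not 4DSOUND’s implementation.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi, clamp=True):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi]."""
    t = (value - in_lo) / (in_hi - in_lo)
    if clamp:
        t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)

class Smoother:
    """One-pole low-pass filter that smooths a stream of control values.

    `amount` in [0, 1): 0 passes values straight through, values close to 1
    smooth heavily (slower response to jumps in the incoming data).
    """

    def __init__(self, amount=0.9):
        if not 0.0 <= amount < 1.0:
            raise ValueError("amount must be in [0, 1)")
        self.amount = amount
        self.state = None

    def __call__(self, value):
        if self.state is None:
            self.state = value  # first value initialises the filter
        else:
            self.state = self.amount * self.state + (1.0 - self.amount) * value
        return self.state
```

A controller value would typically be scaled first and then smoothed, e.g. `smooth(scale(cc, 0, 127, -10.0, 10.0))`, so that jittery sensor or tracking data produces fluid spatial movement.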

E. Control in Ableton Live

A set of Max for Live devices provides integrated control of the 4DSOUND system inside Ableton Live. In a Live set, every audio channel can be linked to a corresponding MIDI channel that communicates the spatial sound data (OSC) to the 4DSOUND Engine. All parameters of the system can be automated on a timeline in Live’s Arrangement View. This way of working integrates extensive spatial sound control into the established workflow of editing and mixing a music composition or soundtrack.

Live’s Session View allows spatial sequencing by using MIDI clips as spatial sound scripts. Spatial data can be stored and recalled by writing XYZ coordinates or path_names into clips. The clip’s start, stop and loop options can be used to synchronise the speed and scale of spatial movements with the timing of audio clips. Clips also make it possible to automate any parameter of a sound source, either linked to or unlinked from the clip’s time selection.
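Synchronising a movement to a looping clip amounts to deriving a phase from the tempo and the loop length. The sketch below assumes a constant tempo and maps one loop to one revolution of a circular movement starting at +Z; `loop_phase`, `circle_position` and the default height are hypothetical and do not describe the Max for Live devices themselves.

```python
import math

def loop_phase(elapsed_seconds, bpm, loop_beats):
    """Fraction [0, 1) of the way through a looping clip of loop_beats at bpm."""
    beats = elapsed_seconds * bpm / 60.0
    return (beats / loop_beats) % 1.0

def circle_position(phase, radius, height=1.5, centre=(0.0, 0.0)):
    """Point on a horizontal circle (X/Z plane): one full revolution per loop."""
    angle = 2.0 * math.pi * phase
    return (centre[0] + radius * math.sin(angle), height,
            centre[1] + radius * math.cos(angle))
```

At 120 BPM with an 8-beat loop, 2 s into the clip gives a phase of 0.5, i.e. the source is halfway around the circle; doubling the loop length halves the speed of the movement without changing its shape.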

Working in Session View is further extended by a dedicated system for storing and recalling spatial sound settings from a clip (Instants). Instant files allow users to create their own libraries of pre-composed spatial attributes, movements and processes, and to recall these settings at any given moment and place within a Live session.

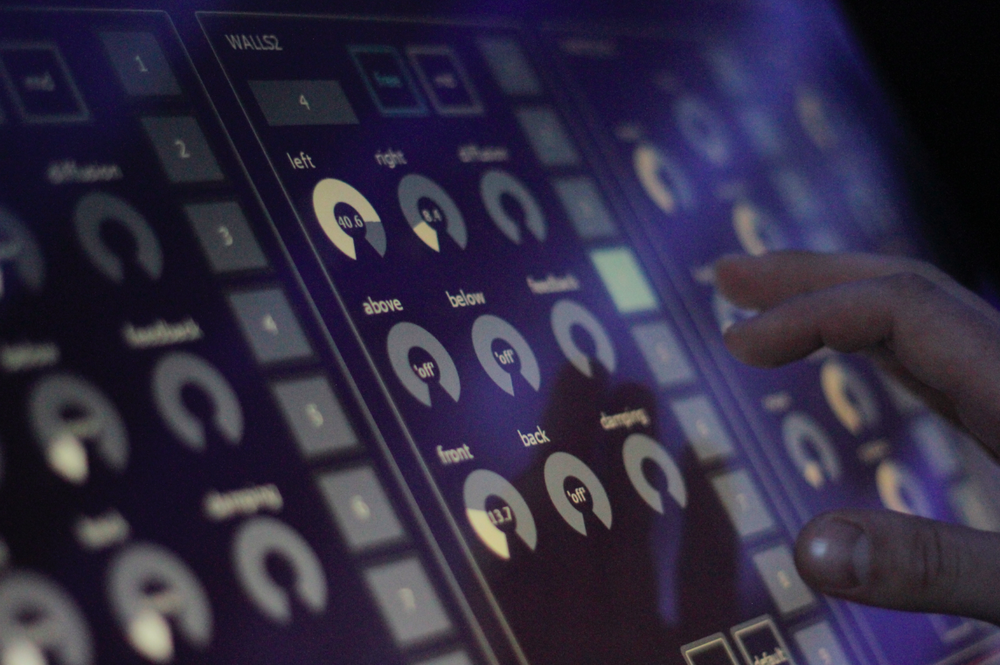

F. iPad and Touchscreen Control

A customizable template for iPad control has been developed using Lemur and its extended programming language Canvas. These controls have also been ported to a 24” touchscreen using Canvas for Android devices. Lemur’s integrated Physics Engine provides an extension for generating spatial movements directly from a control surface, allowing the speed, friction and tension of moving objects, slides and strings to be modelled.

The iPad control of a 4DSOUND System was initially conceived as a remote control for Live, including pages to trigger scenes and clips within Live and to control selected audio and spatial effects. This enables the performer to move freely through the space while performing on a 4DSOUND system. In practice, many variations on the Lemur template have been developed that allow a completely self-sufficient control system from an iPad or touchscreen surface, independent of other control software.

The standard 4DSOUND template includes control of the global position, ploding and rotation of the spatial field, a spatial delay effect, live sculpting of spatial acoustics and dedicated modules for controlling the swarming behaviour of multiple sources.

The control template in Lemur/Canvas has also been applied to prototype the development of custom hardware controllers, such as the Space Control 01 and the TiS 0.9.

G. Custom Hardware Controllers

The dedicated hardware controller Space Control 01 further extends the chain of controls and can be used in sequence with, or independently of, a Live set and Lemur control. This device is tailored for the manual performance of eight sound sources in space. The joysticks are used for the horizontal positioning and the side wheels for the vertical positioning of each source in space. The wheel below changes the physical dimensions of each source. Each source also has an additional knob that sends it to the virtual walls, so that it receives their reverberation.

The Space Control 01 communicates via OSC, and the addressing, values and scaling of its controls can be customized within the 4DSOUND Engine. Space Control 01 was commissioned by Stimming and custom-designed for his live show using a 4DSOUND System. The hardware was built by Studio360 in Austria.

The TiS Control 0.9 is a prototype for a 24” touchscreen interface designed to give artists more direct access to the possibilities of space in their sound production and performance. Designed as a stand-alone spatial sound controller for performing on the 4DSOUND system, it caters to the immediacy of a DJ mixer while striving to integrate extensive spatial control options within a compact and accessible format.

The TiS Control 0.9 contains eight channels that are used to mix live on the 4DSOUND system. Instead of controlling the output volume of an audio channel, each channel controls the distance of a sound source, which indirectly regulates perceived volume in combination with positional information within the spatial field. EQ balancing of an audio channel is handled by changing the shape and dimensions of the sound source. The controller condenses the control parameters of a 4DSOUND System into four dedicated spatial FX knobs per channel/sound source, enabling artists to play live with key spatial attributes during their sets, such as reverb, spatial delays, spatial movement paths and on-the-fly looping of spatial movements.
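The idea of mixing by distance can be illustrated with the free-field inverse-distance law, under which the level drops by about 6 dB per doubling of distance. The sketch below maps a fader to a distance and reports the resulting level change relative to a reference distance. The logarithmic fader curve, the 1 m to 64 m range and the function names are assumptions for illustration; the actual system combines distance with other positional cues, as the text notes.

```python
import math

def fader_to_distance(fader, near=1.0, far=64.0):
    """Map a fader position (1.0 = top, 0.0 = bottom) to a source distance in metres.

    Logarithmic mapping, so equal fader travel gives equal level steps.
    """
    fader = min(max(fader, 0.0), 1.0)
    return near * (far / near) ** (1.0 - fader)

def distance_level_db(distance, reference=1.0):
    """Free-field inverse-distance law: about -6.02 dB per doubling of distance."""
    return -20.0 * math.log10(distance / reference)
```

With these defaults, the fader’s midpoint places the source at 8 m, roughly 18 dB quieter than at the top position, while the source remains audible as a distant object rather than simply being turned down.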

The controller was designed for the curatorial programme 4DSOUND: Techno Is Space.

H. Real-time Position Tracking

Integrated in the system is a real-time position tracking system based on Ultra Wide Band (UWB) radio, using Ubisense sensors and tags. The sensors map the physical space and track tags that can be carried by people moving in the space. The 4DSOUND software maps the real-time position data of the moving bodies to position information that can be used to move through or transform spatial sound images.
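Mapping tracker data into the spatial field is, at its simplest, a change of coordinate frame. The sketch below assumes a tracker frame in metres with its origin in a room corner, x and y on the floor and z pointing up; these are assumptions for illustration, not documented Ubisense or 4DSOUND conventions, and `tracker_to_field` is a hypothetical helper. The target frame follows the convention given earlier: X left/right, Y above/below, Z front/back, origin at the centre of the listening area on floor level.

```python
def tracker_to_field(tag_xyz, room_centre_xy, flip_front=False):
    """Convert a tag position from an assumed tracker frame to the field frame.

    Assumed tracker frame: metres, origin in a room corner, x and y on the
    floor, z pointing up. `room_centre_xy` is the centre of the listening
    area expressed in tracker x/y coordinates.
    """
    tx, ty, tz = tag_xyz
    cx, cy = room_centre_xy
    x = tx - cx  # left/right offset from the centre
    z = ty - cy  # front/back offset from the centre
    if flip_front:
        z = -z  # use when the tracker's y axis points towards the back of the room
    return (x, tz, z)  # tracker height becomes the field's Y axis
```

A tag carried at 1.2 m height, 1 m from the centre along the tracker’s y axis, maps to a field position 1 m in front of or behind the centre, depending on `flip_front`; the resulting coordinates can then be sent as source positions.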

The position tracking system has been used in various projects created in 4DSOUND. In the opera Nikola, singers carry their amplified voices with them as dimensional sound sources as they physically move through the space, providing natural-sounding, localisable support for the acoustic projection of the voice. Performance artist and composer Marco Donnarumma used the position tracking interface in his work 0:Infinity to capture three visitors in a sound-processing system based on the visitors’ spatial positions, their distance from each other and behavioural derivatives, such as the attraction the visitors developed towards each other over time. The position tracking system has also been used to create an interactive spatial sound remake of the well-known videogame Pong.

I. Motion Capture and Gesture Control Systems

A variety of applications have further extended the physical control of the 4DSOUND system with motion capture technology, mapping various controller data describing how the body moves in space to OSC messages for the spatial control of sound.

Multimedia artist Rumex created integrated spatial sound control driven by the movements of a dancer in her work Sonic Lure. She used Kinect video motion capture to translate gestural qualities of the body into movements of sounds in space, and RiOT sensors to capture the orientation of the limbs and body to control rotations in the sound field. Composers and live-electronic performers Matthieu Chamange and Herve Birolini used two LeapMotion sensors and a motion-sensitive Wii controller to perform the spatial behaviour of twenty-four sound sources with their hands and fingers.

J. Bio-physical and Sensory Feedback Systems

A variety of new interfaces and control applications have been developed that establish a direct connection between the inaudible states and transformations of the inner body and the audible space around us.

The XthSense, a bio-wearable instrument, was integrated with the 4DSOUND System to capture inaudible sounds from inside the listener’s body, such as the heartbeat, muscle tension and blood flow. The pitch, texture and rhythm of these sounds can be interconnected with spatial behavioural attitudes.

In the work Body Echoes, techno artist Lucy captured the inner movements in the body of yoga teacher Amanda Morelli through a system of custom-built Arduino controllers and close-up microphones capturing Morelli’s breath and heartbeat, and translated the raw data from Morelli’s body into corresponding spatial sound images that followed the energy of her audible breath in space.

Lisa Park developed communication between commercial EEG brainwave headsets and the 4DSOUND System through a custom-built smartphone app that translated the real-time brainwave data into OSC messages for spatial control of sound.

Conclusion

In everyday life, people process complex spatial sound information to interact with their surroundings, mostly involuntarily. We are exposed to continuous movement in the environment around, above and beneath us. Within this environment we ourselves move in complex patterns, sometimes fast and straight, then slow and hesitant, turning back and forth, possibly lying, standing, bending or sitting. We are continuously affected by changes of movement around us, and through our patterns of behaviour we influence our perception of this environment and stimulate movement in the environment itself. To further our explorations of spatial sound as a medium, it is essential to restore the reciprocity between the listener and the sounding space, so as to achieve lifelike and meaningful experiences of spatiality.

In conclusion, we may state that it is possible to create an innovative and non-conventional sound system that removes the localizability of the sound source from the equation, regardless of the listener’s individual hearing properties, and improves loudness perception at equal acoustic power. The system provides for a social listening area, is backwards compatible with existing audio reproduction formats, allows integration with a wide variety of control interfaces and encourages new approaches in design.

—

About The Authors

Leo de Klerk (1958, NL) is tonmeister, composer, founder of Bloomline Acoustics and inventor of Omniwave, the inaudible loudspeaker.

Poul Holleman (1984, NL) is sound technologist, software developer, co-founder and lead software of 4DSOUND.

Paul Oomen (1983, NL) is composer, founder of 4DSOUND and head of development at the Spatial Sound Institute, Budapest.

Related:

‘Integrating Sound in Living Architecture Systems’ (2018) [PUBLICATIONS]