Composing in 4DSOUND (2017)

Svetlana Maras

Following the author’s residency at the Spatial Sound Institute in December 2016, Maras shares her observations on environmental listening, discusses how working with space can be approached from a compositional point of view, and outlines some of the technical possibilities and choices that creating on the 4DSOUND system provides.

Listening in 4D

Contrary to my expectations, and to my great surprise, on first listening I wasn’t particularly fascinated by the sound of the 4D system, as it somehow felt very familiar. The crucial shift in perception happened the moment I found myself on the other side of it: not as a listener but as a creator and performer, with the chance to influence the sound events in the space around me and to observe the effects on a monitoring screen. Only then did I begin to grasp that something genuinely new had happened, and I was instantly captivated. This reversal struck me and kept me thinking about the experience.

Listening in 4DSOUND is somewhat like listening to our everyday environment. Sound events occur at different locations, and each event has innate sonic properties (e.g. timbre, structure, pitch, volume) as well as acoustic ones that the space itself attributes to it: mass, dimensions and the reflections that tell us how reverberant the space is. Events appear and disappear in space, and each has a specific duration. And, contrary to how we most often listen to music, there is no sweet spot: just like in real life, the listener’s position influences many aspects of the soundscape and how it is perceived. The properties of the 4D system create a remarkable resemblance to our common, everyday listening environments. The space is wide and empty, and the speakers are hardly noticeable and do not obstruct your movement in any way; they are placed high enough above your head and low enough below your feet to keep the pathway completely clear. Unlike in our everyday environments, where sounds are associated with objects or beings in space, here we are unable to point to the source of a sound. The monitoring system, however, visualises the sound events in an animated 3D environment on screen.

The thin line where everyday listening breaks into the artificial and miraculous world of 4DSOUND is the moment you access the 4D system and the technology enables you to manipulate the soundscape by musical or even purely spatial means. How often has traffic been so unpleasantly loud that you wished you could mute it, or have your neighbour’s children jumping in the apartment above made you want to turn the bass frequencies down? Or has an accidental resonance of your fridge in the night sounded so good that you wanted to loop it forever? To some extent, 4DSOUND offers the visionary, utopian possibility of being the master of your acoustic environment and adjusting it to your own wishes.

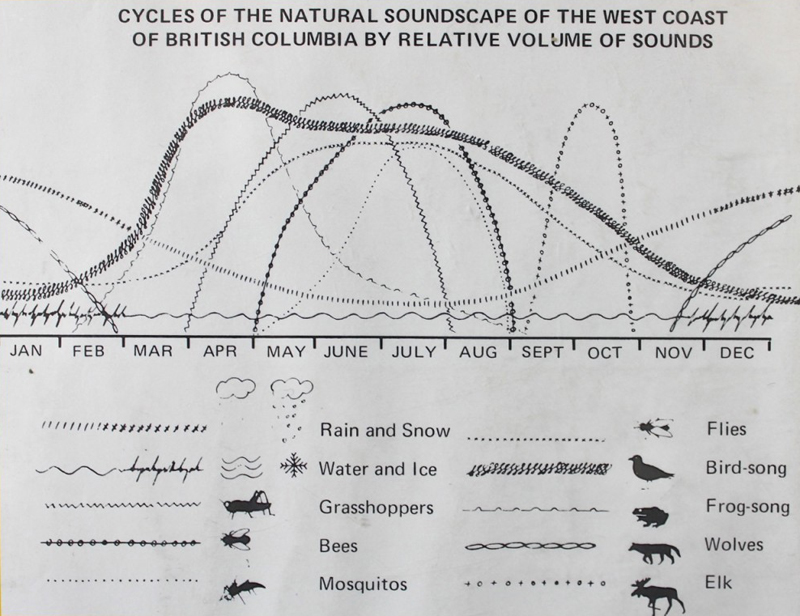

From the rich history of 20th-century music, and especially from the field of acoustic ecology investigated in depth by the group of researchers at Simon Fraser University in Canada, we have learnt to listen to the subtleties of our worldly soundscape and to appreciate them for their musicality and for the diversity of information about our environment that they provide. Inevitably, the ear ‘tuned to the soundscape of the world’ examines these sounds through their spatial relationships and, beyond that, recognises the effect of environmental acoustics on these sounds and their relations. Sound and space are profoundly fused, and most of the time we perceive them (consciously or subconsciously) in this coalition.

Diagrams of the sound ecologies occupying the environment of British Columbia, Canada, over a period of a year by Barry Truax

Working with 4DSOUND enables us to understand these relations thoroughly and to develop a more conscious perception of the sounds that surround us. Drawing on everyday experience, we can tell whether sounds are close or far away, large or small, approaching us fast or passing by slowly, and so on. But by exercising our hearing with 4DSOUND, we can start to learn more about all the possible mutations of these sounds and their musical transformations through time and space; our listening capacity thereby develops and can enrich our everyday listening experience.

When thinking about 4DSOUND from a composer’s perspective, it’s worth mentioning another listening approach, one stemming from ideas proposed by conceptual artists, most notably in the 1960s and 70s. The concept of ‘cracks in the wall’ of the Western concert hall, instigated by the work and thought of John Cage and manifested most clearly in his piece 4’33” (1952), highlighted listening as an activity that can change, influence and create what one is listening to, as opposed to passively perceiving it. Yoko Ono’s conceptual pieces in the book Grapefruit (1964) exemplify this beautifully and poetically: events such as “the sound of stone aging” or “room breathing” are to be recorded on tape.

Although they have a deep musical quality, these works are represented not through sound but through text (an instructional score). Language is used to manipulate sound not in musical but in poetic and imaginative ways. The perception of these works is purely conceptual and occurs in the domain of inner listening (imagining the musical output).

From ‘Grapefruit’ - A Book of Instructions and Drawings by Yoko Ono

Listening, in this case, becomes equivalent to consciously thinking about the sound, and it is as creative and active an agency as composing or performing a piece. This interpretation of listening as a conscious activity is the basis of the sound walk as an artistic practice, famously employed by Max Neuhaus in the 1970s as well as by many other artists and researchers. These walks rely on the idea that the city soundscape is already a composition in its own right and that the ear that hears and deciphers it is the performer. In my work Armoniapolis, I have followed a similar path by using environmental sounds as material for composing short instructional pieces. In this work, key sounds are to be identified in the soundscape and then combined or transformed by applying audio editing (or other compositional) techniques, but the pieces are to be rendered by imagining the sounding outcome rather than by performing it with technology. When one of the instructions says: “High-pass filter on people talking and distortion on the sounds of birds”, I imagine an intervention on the sounds in the space around me that would be possible only if the technology were brought into this space and applied to these sounds. The only technology employed here, however, is the text.

Manipulating the sounds by using language exemplifies the compositional process, and reading these instructions exemplifies the process of listening.

From www.armoniapolis.com by Svetlana Maraš

These two approaches to exploring the soundscape are examples of how we can analyse and manipulate the sound world around us creatively. I think that knowledge of environmental sound is an essential component of the spatial sound techniques provided by 4DSOUND’s technology. By exercising our hearing and inner listening in spatial terms, we can aim to create music beyond the existing media governed by the prevailing culture of stereo systems and the consumerist culture of headphones, Bluetooth mono speakers and built-in TV and laptop speakers. Listening in 4DSOUND is also an active process that shapes not only how compositions are perceived but also how they are created in the moment. Each listening experience in 4DSOUND is a unique, participatory exploration of the composition, and the inclusive policy (if not ideology) behind the system manifests itself boldly in the technology as well.

Technology

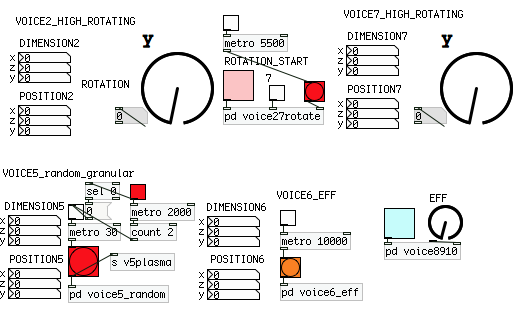

My plan for the residency was to bring a portable setup for live performance consisting of a laptop, an external sound card, a tablet and MIDI controllers. In this setup, I use Ableton Live together with Pure Data. Before coming to the Spatial Sound Institute, I made a sketch in Pd that consisted mostly of automated sound-positioning processes sending out OSC messages to be received by the 4D system. Once I used the correct OSC address paths and connected to the given network port, I was pleasantly surprised to see my patches working instantly. The 4D system proved to be highly accessible: connecting to it did not require me to buy or learn any additional hardware or software, and I think this is an important quality that adds to the ideology of inclusion mentioned earlier.

The user’s activity in the 4D system is twofold:

1) the user provides sounds through his/her own equipment, instrument or setup

2) the sound is controlled through OSC messages sent to the system

As source material, I decided to work with my own sample library, which I imported into Ableton Live. Each track in Ableton is interpreted as a source that can have many spatial parameters attributed to it, and these parameters and their values are defined through OSC messages. Two different ports can be addressed: the first is the 4D.Engine and the second is the 4D.Animator, which visualises your sounds in space on a monitor screen. The first step is to switch on, in the Animator, the “clip” that corresponds to the “voice”; it will then visualise the parameters of the sound that you are sending to the Engine.

Addressing the 4D Engine (left) and Animator (right) in Pd
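The article does not document the 4DSOUND OSC namespace, so the following is only a minimal Python sketch of the mechanism described above: one message encoded in the OSC 1.0 binary format (a null-padded address string, a type-tag string, big-endian 32-bit floats) and sent over UDP to two destinations standing in for the 4D.Engine and the 4D.Animator. The address `/voice/1/position`, the host and both port numbers are hypothetical placeholders, not the system’s real values.

```python
import socket
import struct

# Hypothetical destinations: the real host and ports come from the 4D system's network setup.
ENGINE = ("127.0.0.1", 9000)
ANIMATOR = ("127.0.0.1", 9001)


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded with nulls to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(args)
    payload = b"".join(struct.pack(">f", value) for value in args)
    return osc_string(address) + osc_string(type_tags) + payload


def send_position(sock: socket.socket, voice: int, x: float, y: float, z: float) -> None:
    """Send one (hypothetical) position message for a voice to both the Engine and the Animator."""
    message = osc_message(f"/voice/{voice}/position", x, y, z)
    for destination in (ENGINE, ANIMATOR):
        sock.sendto(message, destination)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Place voice 1 at an arbitrary example position.
        send_position(sock, voice=1, x=-5.0, y=0.0, z=-4.0)
```

Running it as a script sends one UDP datagram to each placeholder port. The real address paths, argument layout and ports have to be taken from the system’s own documentation; only the OSC encoding itself follows the published specification.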

Although I had prepared some complex sound materials, it turned out that even the most basic ones were very effective in this context, where the stereo division plays no role and each source input is essentially treated as mono. I approached my material in 4D as if working in a completely different medium, because my sounds did not have the same qualities in 4D space as in stereo, where I had made them. Some aspects of the sound, such as dimension and movement, were now inseparable from others, such as texture or envelope, and creating a sonically satisfying event required all these factors to work together. A movement that suited one sound could be unsuitable for another. In this context, the sound acquired a sculptural quality and therefore demanded a compositional approach that I wouldn’t otherwise encounter when working in stereo, or even when composing for acoustic instruments.

4DSOUND comes with a complete set of plug-ins made for Ableton Live. However, I felt that a preconceived structure of control would interfere with my compositional thinking and would be too suggestive in many ways. Pure Data, by contrast, gave me an open framework in which to structure any parameter I wanted to work with, and more flexibility in managing the parameters’ value ranges.

Extracts from Svetlana Maras’ Pd patch (top) and 4D’s plug-ins for Ableton Live (bottom row)
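Managing value ranges, as described above, largely comes down to mapping controller output onto whatever range a spatial parameter needs. A minimal Python sketch of a clamped linear mapping, assuming a MIDI controller sending values from 0 to 127 and a target range of -5 to +5 (the coordinate span used in Study no.1); the function name `scale_range` is hypothetical and is not part of Pd or of the 4DSOUND plug-ins.

```python
def scale_range(value: float, in_lo: float, in_hi: float,
                out_lo: float, out_hi: float) -> float:
    """Map value linearly from [in_lo, in_hi] onto [out_lo, out_hi], clamping out-of-range input."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)  # normalised position, 0..1 inside the input range
    t = min(max(t, 0.0), 1.0)              # clamp so a stray value cannot push a source out of bounds
    return out_lo + t * (out_hi - out_lo)


# A fader (0..127) driving the X coordinate of a source between X-5 and X+5.
for cc in (0, 32, 64, 127):
    print(cc, round(scale_range(cc, 0, 127, -5.0, 5.0), 3))
```

Because the output bounds may be given in either order, the same function also inverts a fader’s direction, e.g. `scale_range(cc, 0, 127, 5.0, -5.0)`.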

Certain ‘imperfections’ are embedded in the system that make it a faithful representation of everyday listening environments. These imperfections are deliberately designed and programmed to create this effect, and you can either work with them creatively or avoid them simply by switching off the corresponding function in the engine. One example is distance filtering, which modifies the spectral range of sounds positioned outside the listening area (as if heard through the walls, from outside the studio). Another is elevation filtering, which does something similar according to a sound’s position on the Y-axis (vertical): the sound’s equalisation differs depending on whether it is placed below your feet or above your head. A third of these ‘presets’ is the Doppler effect: instead of programming pitch bends yourself, they result from the sound’s movement in space. Through OSC, you can then fine-tune some of the presets’ characteristics.
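The article does not say how 4DSOUND computes its Doppler effect. To illustrate why pitch bends can fall out of movement alone, here is a minimal Python sketch of the textbook Doppler shift for a moving source and a stationary listener, f′ = f · c / (c + v_r), where v_r is the rate at which the source-listener distance grows. It assumes coordinates in metres and a speed of sound of 343 m/s; the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at about 20 °C


def doppler_ratio(prev_pos, pos, listener, dt):
    """Frequency ratio heard by a stationary listener while a source moves from prev_pos to pos in dt seconds."""
    # Positive when the source recedes, negative when it approaches.
    radial_velocity = (math.dist(pos, listener) - math.dist(prev_pos, listener)) / dt
    denominator = SPEED_OF_SOUND + radial_velocity
    if denominator <= 0:
        raise ValueError("source approaching at or above the speed of sound")
    return SPEED_OF_SOUND / denominator


def ratio_to_semitones(ratio):
    """Express a frequency ratio as a pitch offset in semitones."""
    return 12 * math.log2(ratio)


listener = (0.0, 0.0, 0.0)
approaching = doppler_ratio((10.0, 0.0, 0.0), (9.0, 0.0, 0.0), listener, dt=0.1)
receding = doppler_ratio((9.0, 0.0, 0.0), (10.0, 0.0, 0.0), listener, dt=0.1)
print(round(ratio_to_semitones(approaching), 3), round(ratio_to_semitones(receding), 3))
```

An approaching source yields a ratio above 1 (pitch rises), a receding one a ratio below 1, and a source moving tangentially at constant distance produces no shift at all.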

Svetlana Maras at work at the Spatial Sound Institute, Budapest

Composition

Working with 4DSOUND for the first time requires establishing the link between space, time, sound and their visual representation on screen. To grasp this fully, I chose to work with simple, repetitive sounds and mostly with linear and rotating movements. To some extent, I wanted to imitate the behaviour of physical objects in three-dimensional space. A concrete illustration of this approach: when an object (sound) is stretched spatially, we expect its pitch to rise gradually and its spectral range to narrow and move towards higher bands; we expect large things (sounds) to move more slowly or repeat less frequently than small ones; when a sound ascends vertically, we expect its pitch to rise; and so on.

When it comes to movement, to speak from the visual perspective, the process could be described as placing an object in the room and then animating it. Each object (sound source) had to be a singular, homogeneous event in musical terms, and its transformation was caused by the spatial changes applied to it (and not vice versa). This ‘thingification’ of the compositional process made the link between the sensing of space, time and sound more comprehensible for me, while at the same time exposing the underlying musical process more explicitly to the listener.

It was my first encounter with the 4D system, and rather than realising an already composed piece, I developed a work in dialogue with a system I was only getting to know. The resulting ‘Two Kinetic Studies’ examine the laws of mechanical movement and the spatial and sonic repercussions that emerge from it.

Each of the two studies consists of up to eleven objects. Each object always has the same spatial movement and the same starting position, but not all of them are active all the time. There are several ‘modes’ of playing each piece: these can be defined (programmed) by me, but they could also be left open for the listener to choose and activate. Each mode is a combination of elements in which the objects have their own specific (musical and spatial) behaviours. The repetitiveness of the objects and the interdependence between them resemble a clockwork mechanism. In Study no.1, linear movement along the X-axis (horizontal back-and-forth movement) prevails, while rotation is the dominant movement in Study no.2.

Study no.1 is guided by the principle of symmetry. The central point of the composition is the middle of the room, halfway along both horizontal axes, X and Z. Each object always moves through both halves of the room along at least one of the X or Z axes. When an element is doubled, its movement is mirrored at the same pace by an element on the opposite side of the axis, travelling in the reverse direction: element no.6, positioned at Z-4, travels from X-5 to X+5 while, at the same speed, element no.7 at Z+4 travels from X+5 to X-5.
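The mirrored pair in this example can be written down directly. A minimal Python sketch, assuming the coordinates are plain room units on the horizontal X/Z plane with the centre of the room at (0, 0), and that t is normalised time from 0 (start of the movement) to 1 (end). Because both X and Z are flipped, element no.7 is the point reflection of element no.6 through the centre at every moment. The function names are hypothetical.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a to b for t in [0, 1]."""
    return a + (b - a) * t


def element_6(t: float) -> tuple[float, float]:
    """(x, z) of element no.6: fixed at Z-4, travelling from X-5 to X+5."""
    return (lerp(-5.0, 5.0, t), -4.0)


def element_7(t: float) -> tuple[float, float]:
    """(x, z) of element no.7: fixed at Z+4, travelling from X+5 to X-5 at the same speed."""
    return (lerp(5.0, -5.0, t), 4.0)


def mirror(pos: tuple[float, float]) -> tuple[float, float]:
    """Point reflection through the centre of the room."""
    x, z = pos
    return (-x, -z)


for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    assert element_7(t) == mirror(element_6(t))  # the symmetry holds throughout the movement
```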

Three elements (1, 2, 3) form the musical nucleus of the composition. These sounds are airy (noisy), but they have a recognisable pitch and form a minor triad. All other elements are tuned to these three, which define the tonal centre of the piece. This treatment of the composition’s harmony parallels the interpretation of ‘symmetrical’ in the spatial domain.
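The text specifies neither the tuning system nor exactly how the other elements are tuned to the nucleus. Assuming twelve-tone equal temperament, and reading ‘tuned to’ as ‘placed on one of the triad’s pitches in some octave’, a minimal Python sketch could look like this; the 220 Hz root and the function names are hypothetical.

```python
import math


def minor_triad(root_hz: float) -> list[float]:
    """Root, minor third (+3 semitones) and perfect fifth (+7 semitones) in 12-tone equal temperament."""
    return [root_hz * 2 ** (step / 12) for step in (0, 3, 7)]


def snap_to_triad(freq_hz: float, root_hz: float) -> float:
    """Return the triad pitch, in any octave, nearest to freq_hz (distance measured in semitones)."""
    semis = 12 * math.log2(freq_hz / root_hz)  # distance above the root in semitones
    octave = math.floor(semis / 12)
    # Triad tones in this octave plus the next octave's root cover every nearest-neighbour case.
    candidates = [12 * octave + step for step in (0, 3, 7, 12)]
    nearest = min(candidates, key=lambda c: abs(c - semis))
    return root_hz * 2 ** (nearest / 12)


nucleus = minor_triad(220.0)                  # elements 1, 2, 3 (hypothetical root: A at 220 Hz)
print([round(f, 2) for f in nucleus])
print(round(snap_to_triad(250.0, 220.0), 2))  # another element pulled onto the nearest triad tone
```

Measuring distance in semitones rather than hertz keeps "nearest" consistent across registers, which matters when some elements sit in much higher or lower octaves than the nucleus.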

Study no.2 is based on the rotational movement of most of its elements; rotation is also the only movement applied globally to all elements at once, at one point in the composition. As the objects rotate, their acoustic characteristics change, and the overall soundscape of the piece is constantly in flux. I worked with sinusoidal electronic sounds, and the vertical dimension of the piece can be understood as a division of the frequency spectrum: some elements cover the higher frequencies, some the middle ones, and a single element covers the (sub-)bass range. In this composition, movement along the Y-axis is used less statically than in Study no.1.
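Rotating a single element, or the whole scene at once, amounts to rotating each source’s horizontal coordinates about a vertical axis. A minimal Python sketch, assuming positions are (x, y, z) tuples with Y vertical (as in the text) and the room centre at the origin; whether a positive angle turns clockwise or anticlockwise depends on the system’s axis orientation, which the article does not state. The function names and the example scene are hypothetical.

```python
import math


def rotate_about_y(pos, angle_rad, centre=(0.0, 0.0)):
    """Rotate an (x, y, z) position about a vertical axis through (centre_x, centre_z); y is unchanged."""
    x, y, z = pos
    cx, cz = centre
    dx, dz = x - cx, z - cz
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (cx + c * dx - s * dz, y, cz + s * dx + c * dz)


def rotate_scene(positions, angle_rad):
    """Apply one global rotation to every element at once."""
    return {name: rotate_about_y(p, angle_rad) for name, p in positions.items()}


# Hypothetical scene: high, middle and (sub-)bass elements at different heights.
scene = {"high": (3.0, 2.0, 0.0), "mid": (0.0, 0.0, 3.0), "bass": (-2.0, -1.0, 0.0)}
for step in range(4):  # a quarter turn at a time
    print(step, rotate_scene(scene, step * math.pi / 2))
```

Since the rotation leaves Y untouched, each element keeps its height and thus its place in the vertical division of the spectrum while its horizontal position, and with it its acoustic character, keeps changing.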

Besides programming each object’s individual movement and behaviour, I decided on four modes for each composition. Each mode consists of a specific combination of elements and the times at which they are activated and deactivated. These provisional decisions could be replaced by the audience’s own choices, and different constellations could be made. My ‘studies’, like some of Jean Tinguely’s sculptures, are continuously working, repetitive machines that cannot be heard in their entirety but become audible segment by segment, as one object sets the next in motion.
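A mode as described here (a combination of elements plus their activation and deactivation times, running as a repeating machine) maps naturally onto a small data structure. A minimal Python sketch, assuming each mode loops over a fixed cycle and each element has one or more (on, off) windows in seconds; the class, the cycle length and all times are hypothetical, and only the element numbers (1 to 3 as the nucleus, 6 and 7 as the mirrored pair of Study no.1) come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Mode:
    """One playing mode: which elements sound, and when, within a repeating cycle."""
    name: str
    cycle: float  # length of one loop in seconds
    windows: dict[int, list[tuple[float, float]]] = field(default_factory=dict)

    def active(self, t: float) -> set[int]:
        """Element numbers sounding at time t; the mode loops, so t is wrapped into the cycle."""
        phase = t % self.cycle
        return {element for element, spans in self.windows.items()
                if any(on <= phase < off for on, off in spans)}


# Hypothetical mode: the nucleus (1, 2, 3) sounds throughout; the mirrored pair 6/7 joins for 5 s.
mode_a = Mode("A", cycle=20.0, windows={
    1: [(0.0, 20.0)], 2: [(0.0, 20.0)], 3: [(0.0, 20.0)],
    6: [(5.0, 10.0)], 7: [(5.0, 10.0)],
})

# A listener could pick any mode by name instead of following the composer's provisional choice.
modes = {m.name: m for m in (mode_a,)}
print(sorted(modes["A"].active(7.0)))
```

Keeping the modes as data rather than code is what would let the audience swap one constellation for another without touching the objects’ programmed movements.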

For more information: www.svetlanamaras.com

Related:

‘Listening to Space’ (2016) [PUBLICATIONS]

‘RTS: Sound Spaces’ (2017) [MEDIA]